(THIS ARTICLE IS MACHINE TRANSLATED by Google from Norwegian)

Technology is inherently neutral and can be used for the good of all or to create profit for the few. Technology can make life easier, but it can also have downsides. It is important to stay one step ahead with regulation, since technological development is unpredictable and sometimes takes great leaps. We need guidelines that also hold the people who use the technology accountable. As things stand today, countries can kill freely with drones without anyone being held accountable. It is possible to stay aware of the technology around us without letting it control us completely, and to keep thinking for ourselves.

Consumption and drones

"Poor is not the one who has little, but the one who needs an infinite amount, and wants more and more", said José Mujica, president of Uruguay from 2010 to 2015, in his speech at the UN Rio+20 conference in 2012.

With plenty of help from the advertising industry and the flood of information, new needs are created. In some parts of the world, people can afford to overconsume. In others, not at all. It is almost as if our part of the world has ADHD. We throw ourselves at everything new, without real focus or a broad perspective. And we are running at full speed. Most people know deep down that this affects the environment. On average, Norwegians own 359 items of clothing, according to the report Clothing and the environment: Purchase, reuse and washing by SIFO researchers Ingun Grimstad Klepp and Kirsi Laitala (2020). The average Norwegian buys 23 items of clothing a year, and one in five garments is never used. Worldwide, the textile industry accounts for approximately ten percent of all greenhouse gas emissions.

With all the amazing technology we have, you would think we would use less energy, unless we also insist on constant material growth. Fortunately, something is happening, for example with the energy efficiency of houses and homes, but at the same time we acquire more and more things that use electricity. We almost feel we have to have more and more, simply because it is available.

Technology evolves, often for the better, but also for the worse. There are few rules and restrictions. The use of drones in war hardly surprises anyone anymore, even though it is a perverse way of waging war: the drone operators sit in safe bunkers and can strike suspected terrorists on the other side of the globe. The Bureau of Investigative Journalism (BIJ) estimates that US drones killed between 10,000 and 17,000 people in the period 2002–2020, of whom between 800 and 1,750 were civilians. At least 283 children have been killed. In 2021, a single drone strike in Afghanistan killed ten people, among them a father and his seven children. This received a lot of attention, but it is far from an isolated case, let alone the worst. It is worth noting that absolutely no one is held accountable for such war crimes.

Robot dogs with flamethrowers

A few years ago, robot dogs arrived, also known as quadruped (four-legged) robots. They were meant for places that were too difficult or dangerous for people to go. Military versions soon followed, in which the 'dog' could carry equipment. The New York police purchased two Digidog robots from Boston Dynamics. For now, they will not patrol the streets but will assist in dangerous situations. The city's fire service has also ordered two. Boston Dynamics' dogs must not be used to harm people. However, other similar robot dogs, such as Thermonator, can be. There is a version with a flamethrower, which is supposedly versatile and sensible. The 'dog' Unitree Go1 (Air and Pro) costs between US$2,700 and US$3,500 each, plus US$1,000 for shipping. According to the company, Thermonator can bring "on-demand fire" anywhere. Not entirely unexpectedly, you can also buy flamethrower drones, for the outrageous sum of US$1,499. In 2021, Ghost Robotics launched a "Special Purpose Unmanned Rifle" robot dog, equipped with a 6.5mm Creedmoor rifle. The US military has been using robot dogs since 2020, including models from Ghost Robotics, a company that has placed no restrictions on arming its robots.

In itself, it is not wrong to make either drones or robot dogs. They can have peaceful and life-saving uses. But they can be abused and used as weapons. That is why some robot manufacturers, of all people, have issued a call condemning the use of their robots with or as weapons. Boston Dynamics was the first to speak out, and it concluded an open letter from October 2022, addressed to the robotics industry among others, as follows: "We understand that our commitment alone is not enough to fully address these risks, and therefore we call on policymakers to work with us to promote the safe use of these robots and prohibit their misuse. We also call on all organizations, developers, researchers and users in the robotics community to make similar pledges not to build, authorize, support or enable the attachment of weapons to such robots. We are convinced that the benefits to humanity of these technologies greatly outweigh the risks of misuse, and we are excited about a bright future where humans and robots work side by side to tackle some of the world's challenges."

The weaponization of drones and robots, or autonomous weapons, was on the agenda of the UN Convention on Certain Conventional Weapons (CCW) in December 2021, but no consensus was reached. The ongoing war in Europe is unlikely to speed up a renewed discussion; quite the opposite. Some say love is blind, but it sure seems like war is too.

The use of killer robots also erases responsibility. Who should, and who can, be punished if a robot kills children or other innocents in a war? If we think a little further, such weapons can also be used by terrorists and other individuals and groups. To think otherwise is naive, and with prices down to US$1,500, such a robot could well be an attractive investment for them. There are certainly some protests, including from the NGO Stop Killer Robots and, recently, from UN Secretary-General António Guterres in his policy brief A New Agenda for Peace. On 20 July this year, Guterres urged all states to adopt, by 2026, a legally binding treaty that prohibits and regulates autonomous weapons systems. This brings to mind the Convention on Cluster Munitions, which Norway has signed along with over a hundred other nations, but which countries such as Russia, China, Ukraine and the USA have not...

Bill Gates and GMOs

Technology can be amazing. Just think of how the telephone connected people in its day, back when it was fixed and wired. Now, with the mobile phone, it is almost the opposite. We are more accessible, but at the same time more distant, distracted by all the possibilities that mobile phones and the internet offer us. We crave so much 'connection' that we lose track completely. One would think that almost unlimited access to information via the internet would make us smarter and wiser, but is that the case?

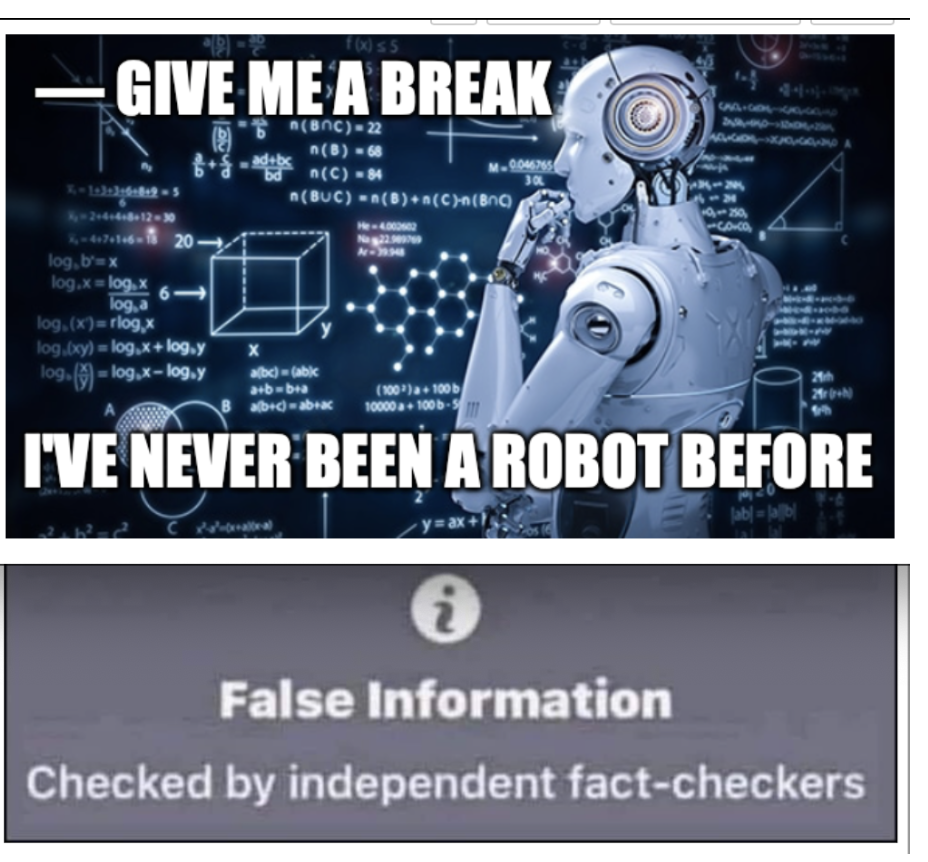

Recently, Bill Gates visited Kenya and attended a meeting at the University of Nairobi. There he claimed that all the corn and wheat bread he had ever eaten had been genetically modified: "Every piece of bread I've ever eaten is from genetically modified wheat, every piece of corn I've eaten is GMO." Genetically modified food was not approved in the United States until 1994, and GMO corn was not available until 1996. The world's first genetically modified wheat was approved in Argentina in 2020 and cleared for use in the United States only in 2022. Bill Gates was born in 1955.

A fact-checking agency was on the scene to verify one of the claims made at the meeting, including in a message on Twitter. Yet it cites Bill Gates' statement without any comment on it. This is so basic that it is hard to believe that no one has caught Bill Gates in such an outrageous lie. What purpose can such a claim serve, other than propaganda? It is also almost unthinkable that Bill Gates was unaware that this was pure fabrication. What is the point of fact-checkers if they are so biased, or so unaware of what they are checking? Fact-checkers such as Poynter and Africa Check receive much of their funding precisely from Bill Gates and his foundation, as the well-respected Columbia Journalism Review has described. The review also explains why very few major media outlets would dare to criticize one of their biggest sponsors.

Bill Gates' absurd statement was widely reproduced by the press in Kenya, and his flatly wrong claim is quoted without contradiction. Even the French newspaper Le Monde quotes this lie, and everywhere the fact-checkers are conspicuous by their absence. When no one reacts to such a monstrous claim, I really wonder what has happened to the memory and knowledge of people in general, and not least of journalists and fact-checkers, who you would think are the sharper knives in the drawer. All the information is out there, but few bother to dig into it.

Our attention is shrinking. In 2004, the average attention span on screens was two and a half minutes. In 2012, it was 75 seconds, half as long. Now it is down to 47 seconds or less. This is the finding of Professor Gloria Mark, who has studied the subject for many years at the University of California, Irvine. She recently published the book Attention Span: A Groundbreaking Way to Restore Balance, Happiness and Productivity. One may well wonder whether the extensive use of screens, whether TV, computer or mobile phone, is what dulls us and weakens our concentration. According to her, children aged two to four have an average screen time of two and a half hours a day, while five- to eight-year-olds have three hours. She warns against extensive screen use among children and stresses that it can reduce their ability to concentrate. Instead, she suggests low-tech alternatives such as playing outside and reading books.

Artificial intelligence

The quantum computer is coming. For certain kinds of problems, it can work in a far more sophisticated way and far faster than today's computers. It will give us unprecedented opportunities to solve previously unsolvable tasks and to build huge, intricate models. At the same time, its capacity also makes it possible to crack many widely used encryption methods and codes, and if it is misused in this way, private information of every kind could be exposed. Fortunately, such machines are still some way off, and work is under way on alternative encryption methods.

Recently, human-like AI (artificial intelligence) robots told a UN summit that they could run the world better than we humans can. The meeting, the AI for Good Global Summit 2023, was held in Geneva on 6–7 July. The robots also acknowledged that they lack human emotions, but claimed that they are therefore not bound by emotions or other human weaknesses. They did not reflect on the fact that AI systems have previously proven to be both sexist and racist.

A little later, on July 21, executives from seven leading AI companies came together: OpenAI, Amazon, Anthropic, Google, Inflection, Meta and Microsoft. They met with President Joe Biden to agree on voluntary safety rules. The companies promised, albeit in vague terms, measures such as publishing information about safety testing, sharing research with academics and authorities, reporting 'vulnerabilities' in their systems and developing mechanisms such as watermarks that show when content is AI-generated. Some companies have already put parts of this in place. Since the measures are voluntary, they may not be much to shout about, but at least they show that the issue is on the agenda. Voluntary measures also leave the companies free to continue business as usual under the guise of taking the risks seriously, without being bound by real obligations.

We clearly need regulation of technology, robots and AI, and the need is urgent. Many have issued strong warnings that AI poses an existential threat to humanity. In May, 350 technology leaders and researchers published a one-sentence statement: "Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war."

Others, such as Richard Buckminster Fuller and Jacque Fresco, have had great faith in technology as a means of freeing humanity from meaningless work. Perhaps extensive use of AI in governing bodies could even out the large differences between rich and poor? That would be ideal, but in a capitalist system the driving force is not for people to work less and consume less. The reality is therefore that we urgently need regulations that are actually followed, before an angry neighbor fires up his flamethrower drone, or before AI becomes independent enough to develop its own agendas.